Useful Models

Models Useful for High Reliability Organizing

Models and programs can help bring structure to thinking and acting. In stable environments, where variation endangers the program, these adjuncts help organizations succeed. However, several dangers exist when we use programs developed for other organizations or in other environments. We may fail to transfer necessary characteristics because hidden rules were melded to traits of the originating organization. That is, the rules and traits are so closely wedded that we do not distinguish them as separate or recognize them as necessary. We can also become lost in the metaphor and the concrete presentation of abstract thought. At these times rules become reductive and their reason for being is lost. Or we may lose the nuance that led to the model’s success in other environments.

When models and programs become products and replace adaptive thinking, they create rigidity or a reliance on non-adaptive structures. Consequently, complex or dynamic environments will lead to crisis, if not catastrophe, from which the organization may not recover.

The following are models for quality, safety, and reliability that interact with or contribute to high reliability. The descriptions are only to introduce the concepts. The reader is referred to primary sources for more information.

Lean (Toyota Lean)

During recovery from World War II, the automobile manufacturer Toyota developed the Toyota Production System, an approach intended to make the organization steadily more effective and efficient than similar organizations. The result is a lean organization (absence of fat), and the approach became known as Toyota Lean, or Lean, in the 1980s. Its primary orientation is to give value to customers and build sustaining relationships. In the 1970s and 1980s American industry became fascinated with Japan’s quality circles and job security.

A Lean organization considers people more valuable than machines. This respect for people builds relationships inside and outside the organization. The organization relies on the resulting highly motivated people because such employees are more likely to identify a problem and speak up to fix it. This is comparable to the HRO’s deference to expertise. While it was initially thought that Japanese high morale and distributed decision making ensured productivity, this was later found not to be the case.

Lean organizations constantly look for ways to improve, commonly by maintaining vigilance for problems, much as an HRO would through preoccupation with error. The Lean organization uses flow for its processes rather than batch steps, a source of the Just-In-Time delivery method that replaces warehousing or stockpiling materials. Identifying obstructions to flow will uncover sources of waste and identify areas for improvement.

Kaizen, a term used in Lean, refers to a Japanese philosophy of improvement through all aspects of life. Kaizen is the process Lean uses to improve the system and give value. Value, relationships, motivation, and flow make a Lean organization. Value is defined by the customer; Toyota would study and provide that value. An organization that uses Lean understands that it is selling value and must add value to the product or process.

Resilience Engineering - Understanding what goes right

The development of socio-technical systems has created a predominance of knowledge-intensive activities and cognitive tasks. In response to the idea that an accident, as an effect, must have a cause (the cause-and-effect principle), Erik Hollnagel developed the concept of cognitive systems engineering, which holds that we cannot understand what goes wrong if we do not know what goes right. He has taken this further into socio-technical systems to study how humans and technology function as joint systems. Study of normal variability is the beginning of safety studies. This is the basis for his Functional Resonance Accident Model (FRAM), in which accidents occur when resonance develops between functions of a system’s components and creates the hazards that lead to the accident. The premise for FRAM is that performance is never stable, so the system is expected to have internal and external variability. If performance variability is the norm, we should then study what makes the system work: what goes right.

Six Sigma

Believing that reliability comes from consistency, Motorola developed this approach for internal quality control and to increase its return on investment. The company found that, because of the complexity of its products, a level of 3.4 defects per million products was a small enough number of defects that this exceptional performance dramatically reduced complaints about its products. GE then adopted and successfully used the system in the 1990s.

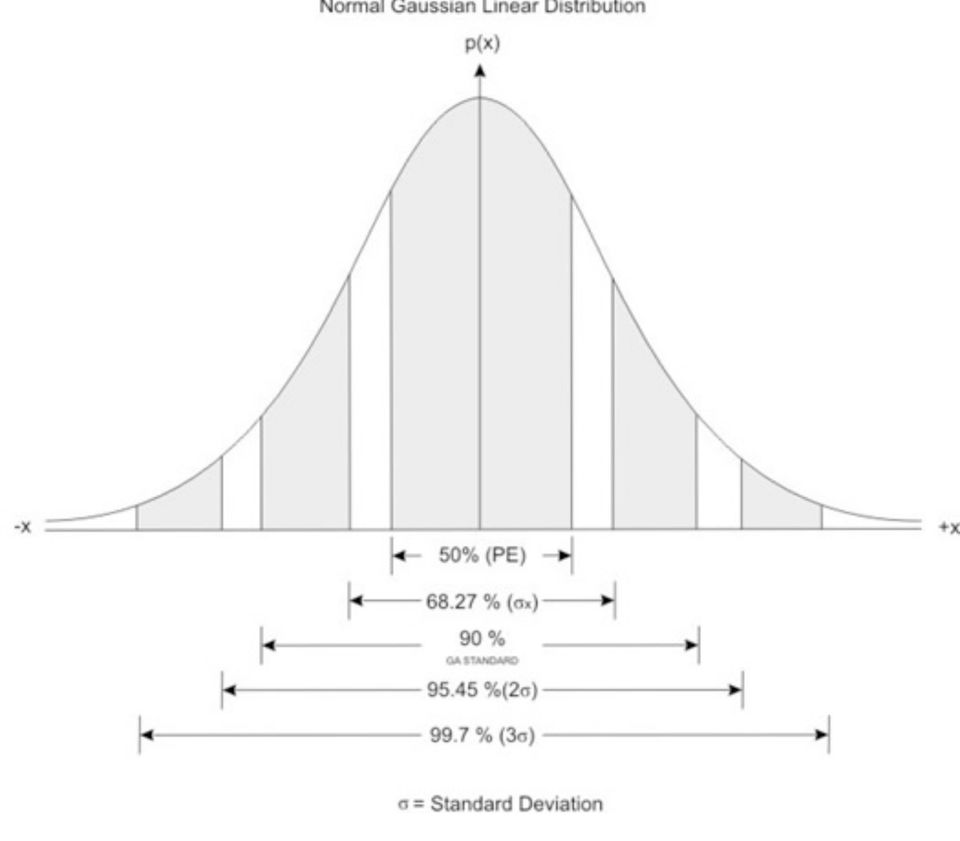

Six Sigma (6σ) is a statistical term that refers to 3.4 occurrences per one million events. Statisticians use sigma (the Greek letter σ, representing a standard deviation) to measure how a sample deviates around the mean (average or norm). The sigmas (standard deviations) are evenly spaced along the X-axis, but the area under the curve between them changes. The area under the curve represents the number in that portion of the sample. When graphed, the sample forms a curve called a “normal curve” (the technical name for this is the normal or Gaussian distribution, see the graph above).

Six Sigma describes the area under the curve that lies beyond six standard deviations from the mean, conventionally quoted as 0.00034% (a figure that allows for a 1.5-sigma drift of the process mean over time). Because the curve falls off so steeply, six sigma is 3.4 occurrences per one million events while five sigma is 233 occurrences.
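The relationship between a sigma level and its defect rate can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the original Six Sigma material: it assumes the conventional 1.5-sigma shift of the process mean (the constant `SHIFT` and the function name `defects_per_million` are illustrative), and it computes the one-sided tail of the normal curve using only the Python standard library.

```python
import math

# Assumption: the conventional Six Sigma figures allow the process mean
# to drift by 1.5 standard deviations over the long term.
SHIFT = 1.5


def defects_per_million(sigma_level: float, shift: float = SHIFT) -> float:
    """Area in the one-sided normal tail beyond (sigma_level - shift),
    scaled to occurrences per one million events."""
    z = sigma_level - shift
    tail = 0.5 * math.erfc(z / math.sqrt(2))
    return tail * 1_000_000


for level in (3, 4, 5, 6):
    print(f"{level} sigma: {defects_per_million(level):,.1f} per million")
```

Under these assumptions the six sigma row comes out at about 3.4 per million and the five sigma row at about 233, matching the figures quoted above. Without the shift, the tail beyond six standard deviations would be far smaller, which is why the convention matters when comparing published numbers.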

Six Sigma assumes that risk and errors develop from variation in processes, which will propagate through the system to reduce the quality of the product. The organization will then benefit by identifying and controlling variation in those processes that most affect performance and profits.

In mechanical processes, inputs, transforming processes, and outputs are related in a deterministic manner, with each directly determining the outcome. Cause and effect are direct and have great importance in identifying where to intervene. If you determine the cause of the problem you can fix it. The cause will be variation (deviation from the expected) in the process that results from error.

In Six Sigma, variation, or error, is to be identified and mitigated. It appears on the normal curve as a curve that is wider than desired. A wide curve represents a larger number of products or processes in the sample that vary from the expected. This variation prevents parts of the system from working with each other, just as you would see if people came to work with different languages, training, clothing, and expectations.

To identify and reduce variation, Six Sigma programs analyze root cause and develop corrective action with a program called Define-Measure-Analyze-Improve-Control (DMAIC).

Crew Resource Management (CRM)

In 1978 a commercial jet with a well-trained crew ran out of fuel and crashed killing 10 of the 189 people on board. The NTSB identified a recurring problem in air crashes, “a breakdown in cockpit management and teamwork during a situation involving malfunctions of aircraft systems in flight .… because their attention was directed almost entirely toward diagnosing the landing gear problem.” Also contributing were the failure of the captain to respond to the crewmembers’ advisories and the failure of the other two flight crew members to successfully communicate their concern to the captain.

NASA then studied the question of how an experienced, well-trained crew can run out of fuel in flight. This occurred, basically, because the pilot refused to take recommendations from junior officers and narrowed his focus to a single problem when under stress. The corrective program NASA developed became Cockpit Resource Management. Because of problems identified in the 1990s with communication between crew on the flight deck and passenger areas, it became Crew Resource Management.

CRM is designed to avoid, trap, and mitigate error through communication, leadership, and decision making. The original problem involved extreme authority gradient and coning of attention under duress for which CRM works well. Its success derives from a change in values, beliefs, and behaviors.

Because the changes required for CRM cannot be imposed on people, it will fail when the professional does not accept and internalize the approach. The officer can perform well in training and testing but revert to maladaptive behaviors in flight or under stress.

Others who use CRM inadvertently reduce it to methods of communication (almost by checklist) and a change in a few behaviors. This misses the methods overbearing leaders use to maintain an authority gradient, such as intimidation by countenance or tone of voice. It also ignores the difficulty of changing behaviors that have major social ramifications. These subtle or nuanced culture changes may be the source of CRM’s success.

Operational Risk Management (ORM)

Operational Performance through Operational Risk Management and Crew Resource Management

Randy E. Cadieux, Major, USMC, MS, United States Marine Corps

Operational Risk Management (ORM), a tool applied by Navy and Marine Corps units, mitigates risks associated with tactical and non-tactical operations. As with any High Reliability tool, it is also used in off-duty activities. Because of its use outside the tactical environment, ORM can be useful to other organizations by reducing operational risks to their personnel and equipment. Its use can raise the organization’s leaders’ awareness of risks, which will influence and improve the safety attitudes of members within the organization. ORM may be viewed as an extension of other types of system-oriented hazard analysis techniques.

Navy and Marine Corps aviation units apply ORM to aviation operations at multiple levels. The ORM planning process attempts to mitigate operational risks through hazard analysis and risk assessment, risk control implementation, and supervision at the most appropriate level. ORM can also be thought of as a tool for mitigating risks associated with operations which involve human interaction with systems and equipment.

ORM involves the analysis of hazards which affect the operational phase of systems and the development of controls to reduce risks to personnel and equipment during operations. Application involves a 5-step process of hazard identification, hazard assessment, making risk-based decisions on hazard controls, control implementation, and supervision of the results. Initially, hazards are identified and then assessed in terms of probability and severity. This assessment results in the generation of Risk Assessment Codes (RACs). The first RACs are known as Initial Risk Assessment Codes.

After identification of Initial RACs, risk controls are developed to reduce risk levels in a prioritized fashion, working from the highest to the lowest risks. These controls include interventions to reduce the probability of occurrence, the severity of impact, or both. Oftentimes operational risks are controlled through the implementation of training, tactics, or procedures (including standardized checklists); other procedural or administrative controls, such as Standard Operating Procedures; the adoption of Personal Protective Equipment policies; and the use of Warnings and Cautions to raise personnel awareness of the potential for hazardous situations. After the risk controls are implemented, the risk analysts determine the Final Risk Assessment Codes based on the probability and severity of hazard occurrence after control implementation.
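The assessment and prioritization steps described above can be sketched in code. This is an illustration under stated assumptions rather than the official procedure: the severity categories (I to IV), probability categories (A to D), the values in `RAC_MATRIX`, and the three example hazards are all hypothetical, chosen only to show how an Initial RAC, a prioritized list, and a Final RAC relate to each other. In this sketch a lower code means a higher risk.

```python
from dataclasses import dataclass

# Illustrative matrix only (an assumption, not taken from the text).
# Rows: severity I (worst) to IV. Columns: probability A (most likely) to D.
# A lower code means a higher risk.
RAC_MATRIX = {
    "I":   {"A": 1, "B": 1, "C": 2, "D": 3},
    "II":  {"A": 1, "B": 2, "C": 3, "D": 4},
    "III": {"A": 2, "B": 3, "C": 4, "D": 5},
    "IV":  {"A": 3, "B": 4, "C": 5, "D": 5},
}


def risk_assessment_code(severity: str, probability: str) -> int:
    """Look up the RAC for one severity/probability pair."""
    return RAC_MATRIX[severity][probability]


@dataclass
class Hazard:
    name: str
    severity: str                # before controls
    probability: str             # before controls
    controlled_severity: str     # after controls are implemented
    controlled_probability: str  # after controls are implemented

    @property
    def initial_rac(self) -> int:
        return risk_assessment_code(self.severity, self.probability)

    @property
    def final_rac(self) -> int:
        return risk_assessment_code(
            self.controlled_severity, self.controlled_probability
        )


# Hypothetical hazards for illustration.
hazards = [
    Hazard("fuel spill during refueling", "II", "C", "III", "D"),
    Hazard("night landing on unlit strip", "I", "B", "II", "C"),
    Hazard("noise exposure on flight line", "III", "A", "III", "C"),
]

# Work from the highest risk (lowest code) to the lowest.
prioritized = sorted(hazards, key=lambda h: h.initial_rac)

for h in prioritized:
    print(f"{h.name}: initial RAC {h.initial_rac}, final RAC {h.final_rac}")
```

Keeping the before and after assessments on the same record makes the residual risk explicit for each hazard, which is the input to the risk decision that follows.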

Decisions about mission execution based on this residual risk (risk decisions) are made at the appropriate level within the organization, an important principle of ORM. At the lower end of the spectrum, risk-based decisions can be made by the operator and, as risk levels escalate, the level of the decision maker increases. Decisions about operations with the highest Final RACs are normally made by those in command of a unit. These decisions about operations must take into account the mission and acceptable levels of risk. The goal of ORM is not to eliminate all risks, but to manage risks for mission accomplishment with a minimum amount of loss. In other words, ORM helps to reduce risks to acceptable levels considering the mission and operational environment.
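The principle of making risk decisions at the right level can be expressed as a simple lookup. The sketch below is hypothetical throughout: the role names in `LEVELS`, the mapping in `MINIMUM_LEVEL`, and the 1-to-5 risk code scale (1 being the highest risk) are assumptions for illustration, since the text does not specify who decides at each level of risk.

```python
# Hypothetical chain of decision makers, ordered from lowest to highest.
LEVELS = ["operator", "mission leader", "department head", "unit commander"]

# Assumed rule: the lowest level permitted to accept a given residual risk.
# Risk codes run from 1 (highest risk) to 5 (lowest risk) in this sketch.
MINIMUM_LEVEL = {
    5: "operator",
    4: "mission leader",
    3: "department head",
    2: "unit commander",
    1: "unit commander",
}


def escalate_to(final_rac: int) -> str:
    """Return the lowest level that may make the risk decision."""
    return MINIMUM_LEVEL[final_rac]


def may_accept(role: str, final_rac: int) -> bool:
    """True if the given role sits at or above the required decision level."""
    return LEVELS.index(role) >= LEVELS.index(escalate_to(final_rac))


print(may_accept("operator", 5))        # lowest risk stays with the operator
print(may_accept("operator", 2))        # high risk must be escalated
print(escalate_to(2))
```

An organization adopting this idea would substitute its own roles and thresholds; the point of the sketch is only that the required authority rises as the residual risk rises.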

There are three types of ORM: Deliberate, In-Depth, and Time-Critical. Deliberate and In-Depth ORM are used when there is adequate time to analyze hazards and often use qualitative and quantitative analysis tools. Oftentimes brainstorming, what-if scenarios, subject matter experts, and lessons-learned are used to identify hazards and then rank the hazards in terms of probability and severity. Time-Critical ORM is a method which shortens the analysis and decision making process to counter threats or ameliorate hazards during time-compressed situations. Decisions can be made by rapidly assessing the situation, balancing options, communicating intentions to crew members, taking immediate action, and then debriefing the action. This is known as the A-B-C-D decision making framework (Assess, Balance, Communicate, Do/Debrief).

In addition to the three types of ORM, four Principles of ORM exist to help guide decision makers. These principles are 1) Accept Risk when Benefits Outweigh Cost, 2) Accept No Unnecessary Risk, 3) Anticipate and Manage Risks by Planning, and 4) Make Risk Decisions at the Right Level. These principles help to guide ORM facilitators and unit leaders during the ORM process.

Navy and Marine Corps aviation has demonstrated that Operational Risk Management assists with the discovery of risks and with elevating risk decisions to the appropriate levels of leadership. ORM helps leaders foster a culture of risk awareness, which has a positive effect on safety attitudes in the unit. Through the proper use of ORM, units can reduce risks to acceptable levels for a given situation and, when risks outweigh the perceived benefits, top leadership has a decision-making tool to identify better courses of action for high-risk operations. Time-Critical ORM has proven to be a valuable tool to aid pilots, aircrew, and ground units in the reduction of risks and in making rapid risk-based decisions in dynamic environments.

Human Factors

Human Factors, earlier known as human engineering or ergonomics, is concerned with the design of machines to facilitate their interactions with people. It is about the human-machine interface. It favors decentralized decision making and a focus on the work group over the individual, because personnel are not isolated atoms but work together. Human factors engineers typically have a background in engineering psychology, which studies the human-machine interface in settings where the human being can be isolated.

Human Factors studies originated after WWII, particularly after failures from poor interfaces between humans and machines. Failures could be found when there was no feedback between the consequences of design (which affect the consumer) and the designer. Design engineers pushed what could be done and surpassed the individual’s ability to perform.

Human Factors has a broad meaning as an interdisciplinary profession studying the interfaces between humans and machines. It applies a blend of engineering and behavioral sciences to solve problems in human-machine interfaces, human control of complex systems, human-to-human communications, and simulators. HF covers individual, team, and now organizational performance.

Human Factors also uses the Public Health model of accidents: human performance vacillates (also the basis of FRAM in Resilience Engineering), and events will produce alternating task underload (complacency and dulling of skill) and task overload (being overwhelmed). That is, there will be long periods of inaction with bursts of action.

Mindfulness

From Mindlessness to Mindfulness

Ellen J. Langer

Information may be true independent of context, such as gravity, but most information we use relies on context to interpret and use. Fair skin on a person has no intrinsic value if we discuss winter clothing but has a great deal of value when discussing sun exposure in summer. To treat information as context-free, that is, independent of circumstances, places us at risk for mindless thoughts, decisions, and behaviors. Placing information within context leads to mindfulness.

Mindlessness develops from automatic behaviors, repetition, and use of a single perspective.

Automatic behavior and repetition have a negative influence on our thinking. Though they both allow us to perform several tasks simultaneously, they move our attention toward the structure of the situation rather than its content. Structure then gains a greater influence on thought than content and context. This also develops when we act from a single perspective or point of view. We may not solicit alternative ideas, or we request specific help rather than the more generalized “Can you help me?” As stated by George Patton, GEN, US Army, “Never tell people how to do things. Tell them what to do and they will surprise you with their ingenuity.”

Mindlessness develops from the pursuit of outcome over process and the belief in limited resources. Pursuit of outcome, “Can I?” or “What if I cannot?”, creates an anxious preoccupation with success or failure at the expense of exploration and creativity. Decreased creativity contributes to belief in limited resources, “What can they do to help me?” More common with novices in high-risk situations is the fatal belief “If I have not seen it then it cannot happen.”

Mindlessness develops when we disregard the power of context and become trapped by categories. The power of context can impede information flow when we discount information because of who is giving it to us. We may consider information only as an answer to a question. Almost like functional fixedness (a wrench functions as a wrench and cannot be used as a hammer), without the specific question the information has no value. We omit other points of view, and other ways to look at a problem are lost. We create categories with distinctions that may or may not be artificial or subjective, and we then use those categories rigidly for problem solving.

Mindlessness has costs to individuals and the organization. A focus on outcomes will narrow our self-image to a limited number of things we do. We accept our labels, “I am just the medical student,” “I am just the firefighter,” and do not see that we have the qualifications to identify, report, or engage a problem or situation. Becoming trapped by categories also permits us to incrementally change the way we act, which can result in normalization of deviance or drift in quality, safety, and reliability. In one form, it results in unintended cruelty, “If you do not understand what I ask, you are not smart.” In another serious form it creates loss of control when we attribute failure or crisis to a single cause. Fixing that cause does not resolve the crisis or make the problem go away. We give up or accept the new circumstances as inevitable.

Nature of mindfulness; applications in creativity and work.

Mindfulness welcomes new information and focuses on the process used rather than on the outcome.

Worry about the outcome of a child’s behavior, house fire, military battle, or investment can lead one to automatic behavior that rigidly follows rules and draws on only one’s own or an authority’s perspective. However, focus on process can increase one’s control of the situation and help us change our interpretation of the context we experience. Multiple points of view enhance our efforts and how we view the situation. Mindfulness then develops.

New information, process, and multiple points of view lead to creativity and innovation, which contribute to the state of mindfulness. Situations that are novel to the beginner benefit from mindful thinking, as do situations novel to the expert. This occurs because novel situations do not always respond as expected. Planning may fail and even endanger people when the organization rigidly follows the plan in a newly emerging or unexpected situation.

Mindfulness and focus on process make the work more absorbing and pleasurable but most of all they give satisfaction. Pleasure and happiness are passive emotions that happen to us while satisfaction involves an active pursuit. Satisfaction results from adapting to a new situation or solving a novel problem and occurs in a different part of the brain than happiness or pleasure. Mindful learning and problem solving are more likely to bring satisfaction and self-motivation.

In dynamic states mindfulness develops a new dimension. Anticipation has greater effectiveness, and focus on process rather than outcome makes containment of the situation a goal of the individuals.

Langer, Ellen J. Mindfulness. Cambridge, MA: Da Capo Press, 1989.

Langer, Ellen J. The Power of Mindful Learning. Cambridge, MA: Da Capo Press, 1997.

Recognition-Primed Decision Making

This is a model described and codified by Gary Klein to explain how people make quick and effective decisions. The decision maker generates actions based on past experience or training, then selects the one most likely to succeed. This can occur rapidly and below the level of conscious thought. Unusual or complex situations, however, can lead to error. This model describes the difference in speed of decision making between experienced and novice decision makers as well as the need to understand experience.